    ...
    testsuites.DriverInfo{
        Name:        "csi-nfsplugin",
        MaxFileSize: testpatterns.FileSizeLarge,
        SupportedFsType: sets.NewString(
            "",
        ),
        Capabilities: map[testsuites.Capability]bool{
            testsuites.CapPersistence: true,
            testsuites.CapExec:        true,
        },
    }
    ...

Kubernetes cluster

Testing Performance and Scalability

Evgenii Frikin

Huawei

1.0.0

2021-05-30

About me

Agenda

Introduction

Problem

Scalability testing

Workload testing

Solution

Overview

Compare

Deploy

Test

Measurements

Comparison of results

Experience

Introduction

"One of the most important aspects of Kubernetes is its scalability and performance characteristics. As a Kubernetes user, administrator, or operator of a cluster, we would expect to have some guarantees in those areas."

Source: Kubernetes Team, "What Kubernetes guarantees?"

Introduction

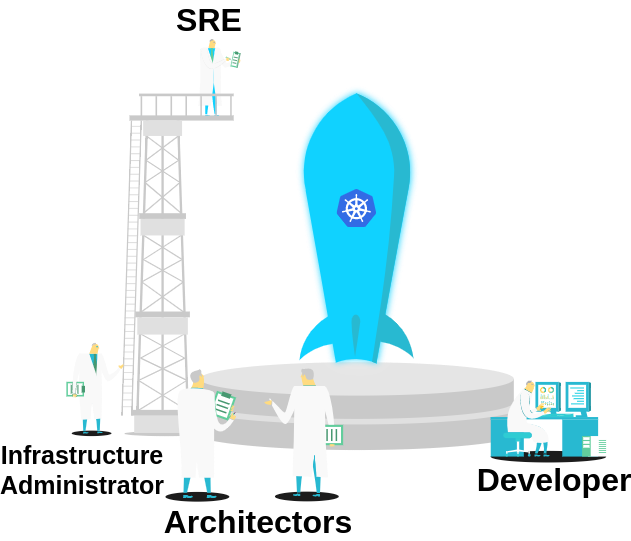

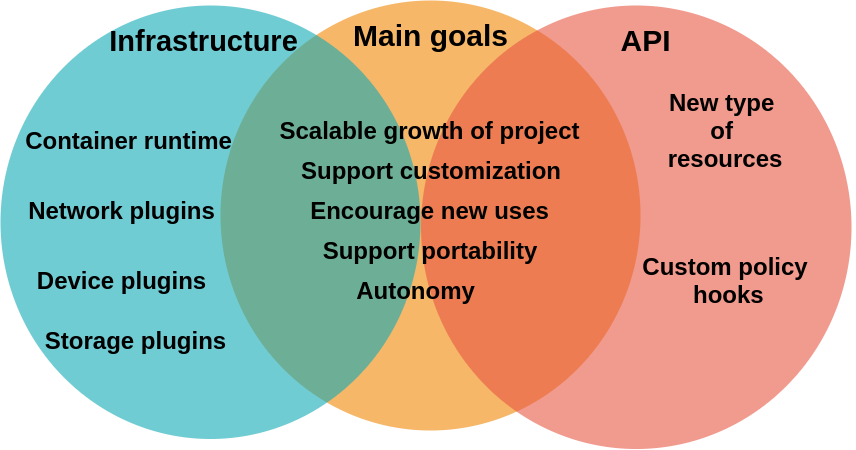

Developers

New features in Kubernetes (e.g., Scheduler or API)

Components outside of Kubernetes (e.g., CNI, CSI, or a k8s Operator)

Introduction

Administrator/Operator

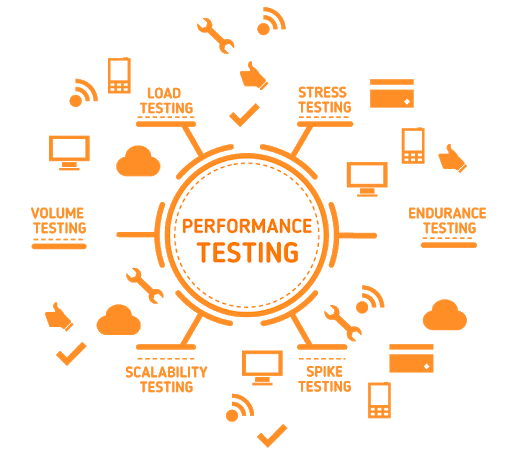

Performance

Scalability

Stability

Introduction

Architect

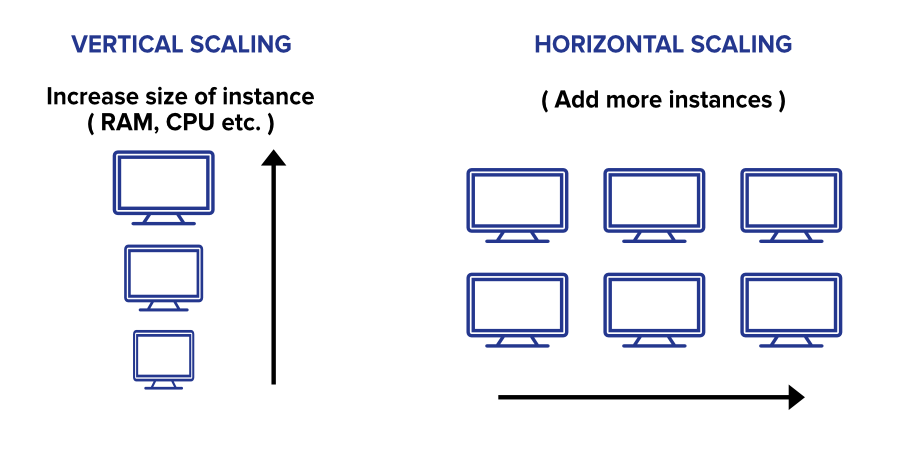

Capacity planning

Cost calculation

Scalability architecture

General problems

Scalability testing problems

Spawning large clusters is expensive and time-consuming

Testing must be repeated for each release of Kubernetes or its components

A lightweight mechanism is needed to deploy a k8s cluster quickly

Workload testing problems

Unfriendly for users

Most components are developed outside of Kubernetes

Golang test definition example

YAML test definition example

    ginkgo -p -focus='External.Storage.*csi-hostpath' -skip='\[Feature:|\[Disruptive\]' \
        e2e.test -- -storage.testdriver=/tmp/hostpath-testdriver.yaml

Scalability testing solutions

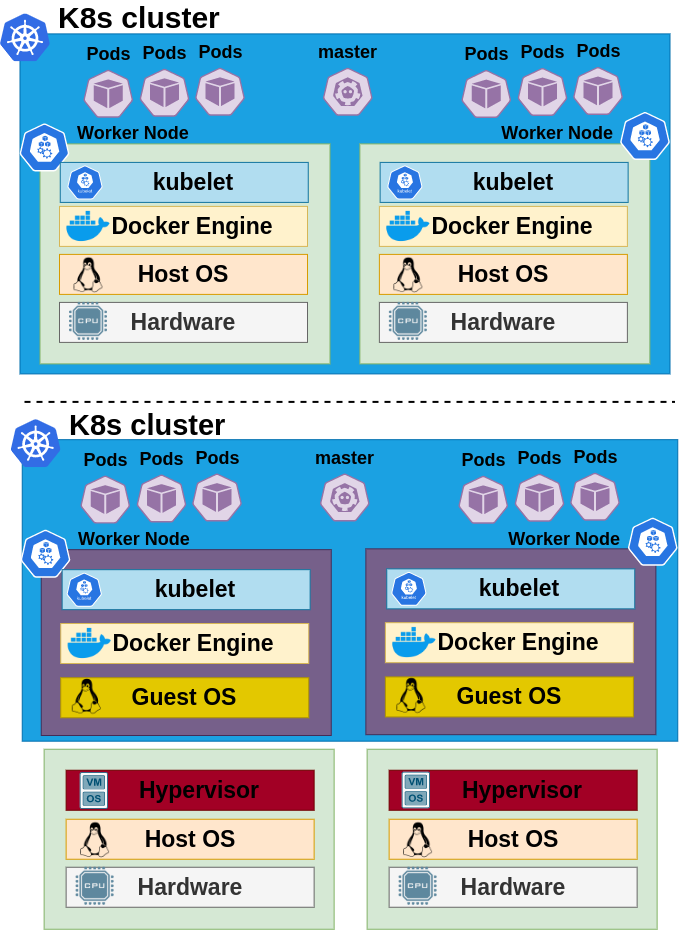

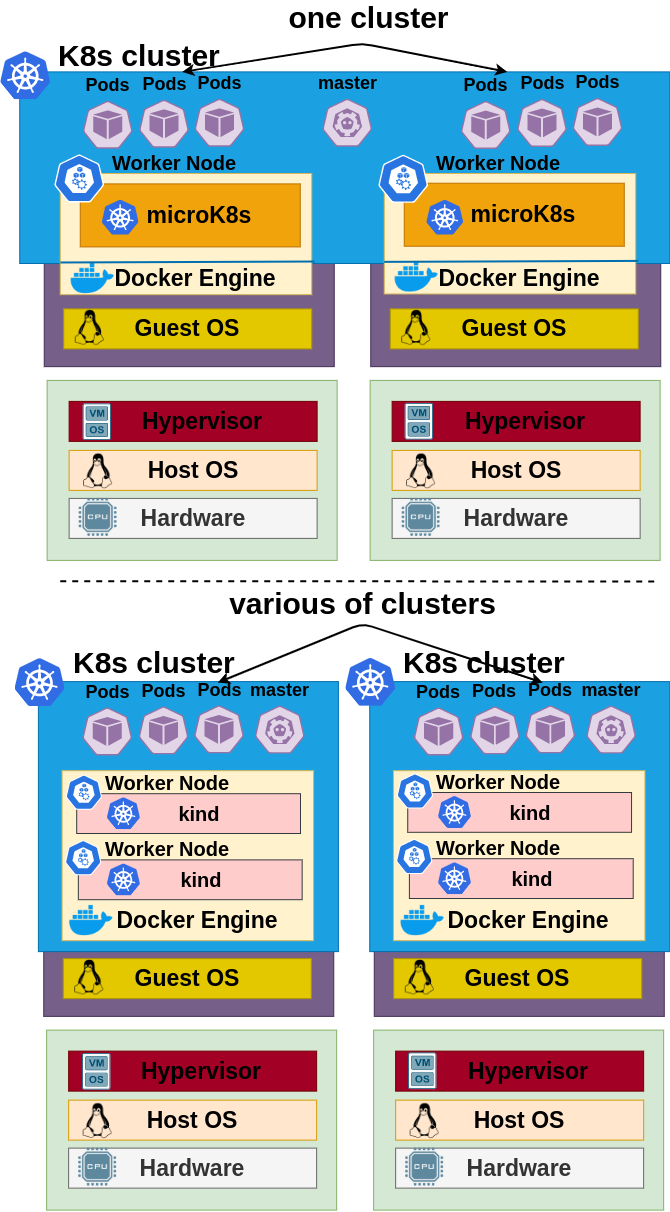

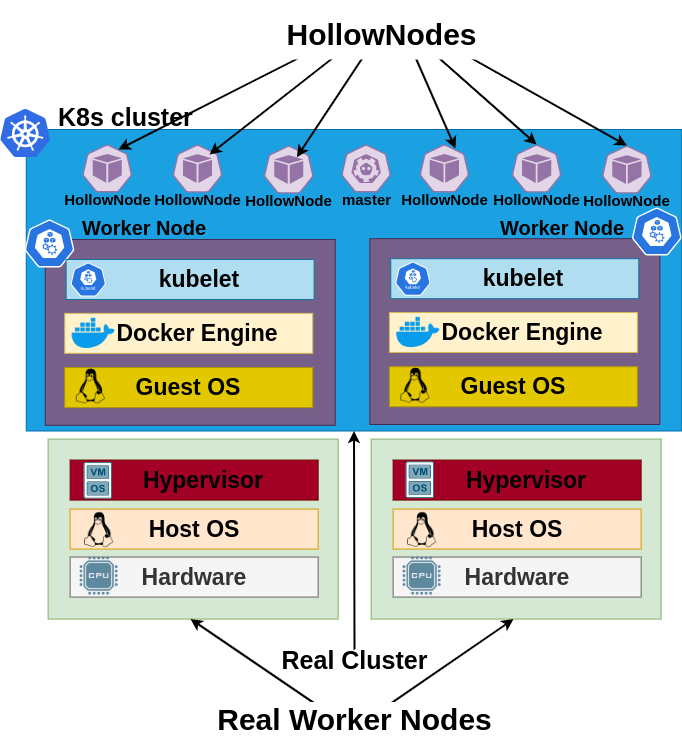

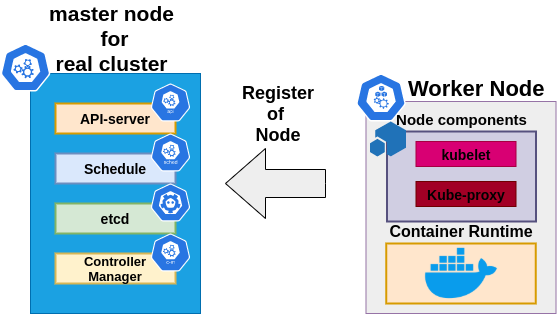

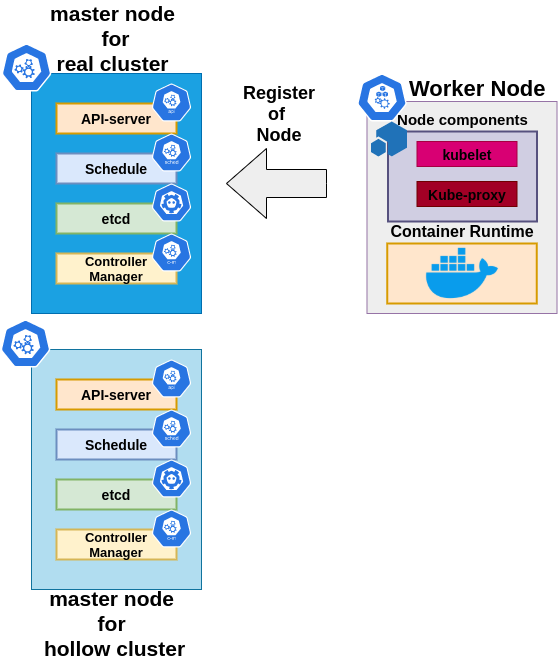

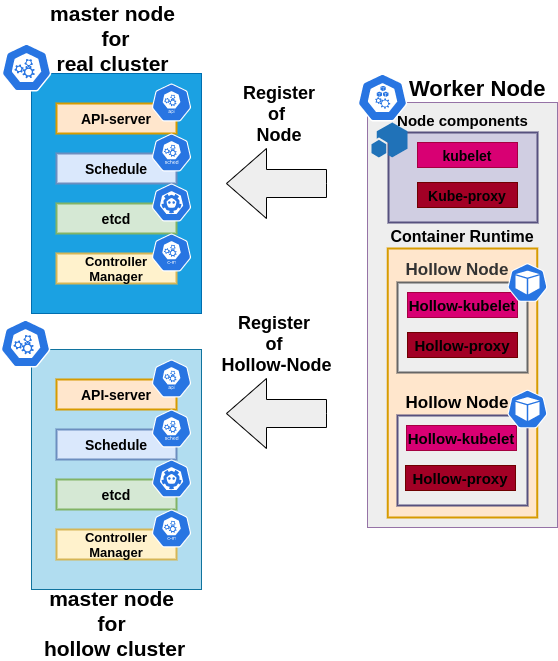

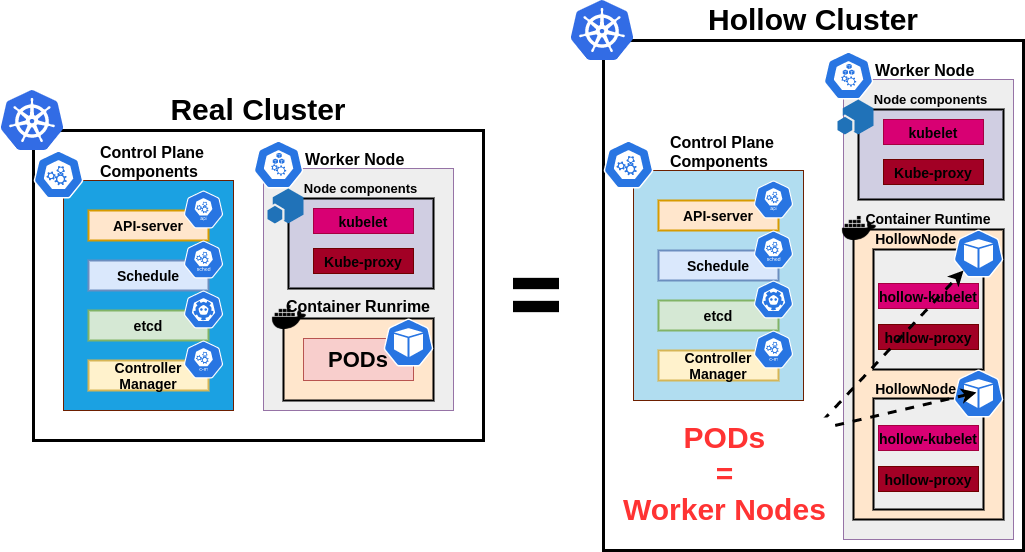

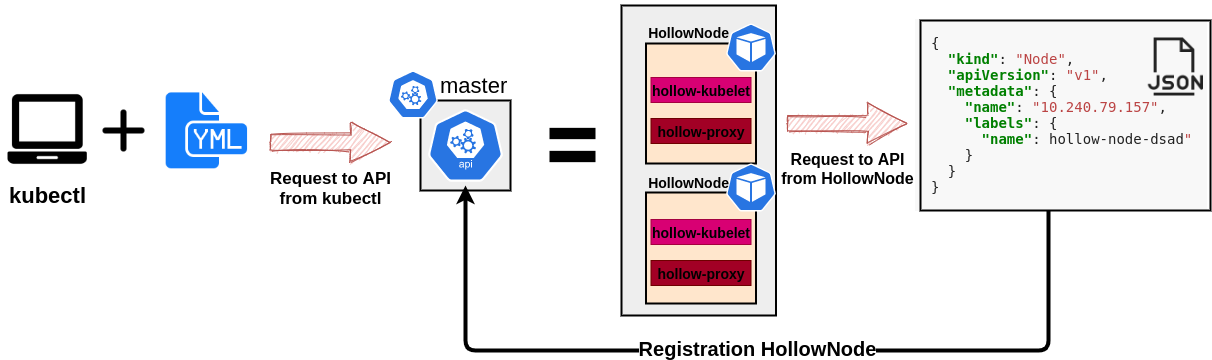

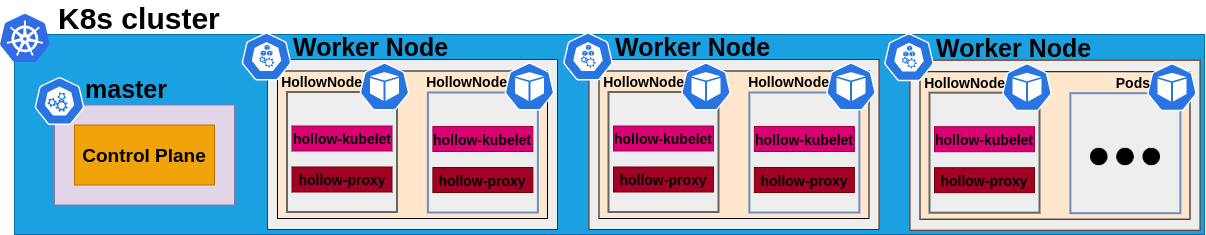

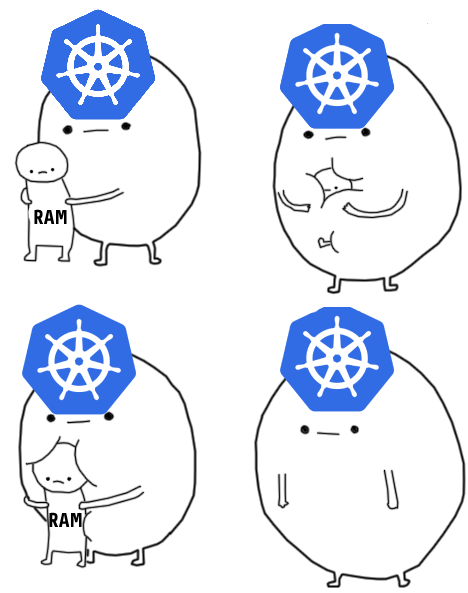

Why kubemark?

Kubemark is a performance testing tool which allows users to do experiments on simulated clusters.

— Kubernetes blog

Why kubemark?

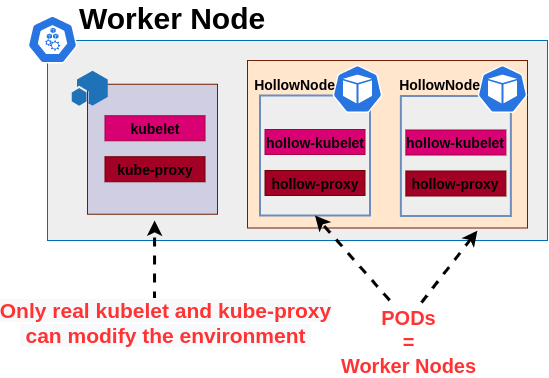

Hollow cluster = real cluster

Why kubemark?

Capability to run many instances on a single host

HollowNode doesn’t modify the environment in which it is run

Why kubemark?

Cheap scale tests

~100 HollowNodes per core (~10 millicores and 10MB RAM per pod)

Simulated cluster = Deploy time of a real cluster + Deploy time of HollowNodes

kubectl is used for all scaling operations
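The rule of thumb above can be turned into a quick sizing estimate. The sketch below only applies the quoted figures (~100 HollowNodes per core, ~10MB RAM per pod); the function names and the cluster sizes in `main` are illustrative assumptions, and real consumption depends on the workload.

```go
package main

import "fmt"

// Rule-of-thumb figures quoted above; treat them as rough estimates.
const (
	hollowNodesPerCore = 100 // ~100 HollowNodes per CPU core
	memPerHollowNodeMB = 10  // ~10MB RAM per HollowNode pod
)

// coresNeeded returns the whole CPU cores needed for n HollowNodes, rounded up.
func coresNeeded(n int) int {
	return (n + hollowNodesPerCore - 1) / hollowNodesPerCore
}

// memoryNeededMB returns the approximate RAM in MB needed for n HollowNodes.
func memoryNeededMB(n int) int {
	return n * memPerHollowNodeMB
}

func main() {
	// Cluster sizes are illustrative assumptions.
	for _, n := range []int{50, 1000, 5000} {
		fmt.Printf("%d HollowNodes: ~%d cores, ~%d MB RAM\n",
			n, coresNeeded(n), memoryNeededMB(n))
	}
}
```

The estimate covers the HollowNode pods only; the control plane still has to be sized for the full simulated node count.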

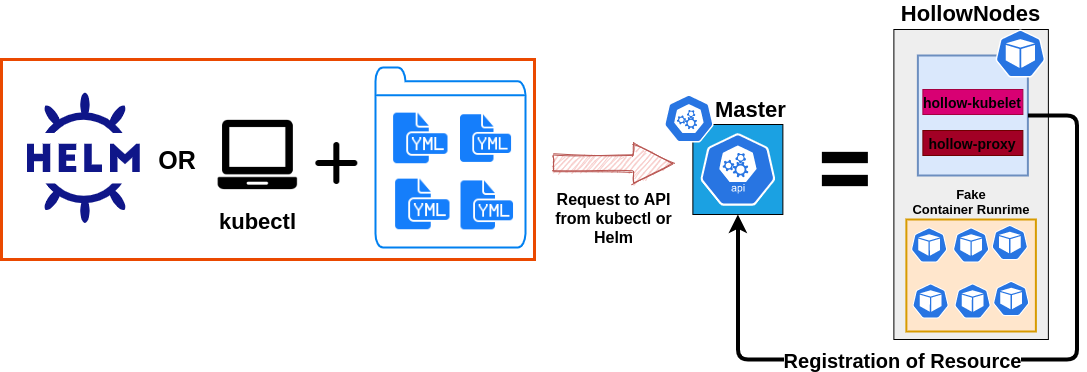

Workload testing solutions

helm or kubectl apply -f + some YAML files

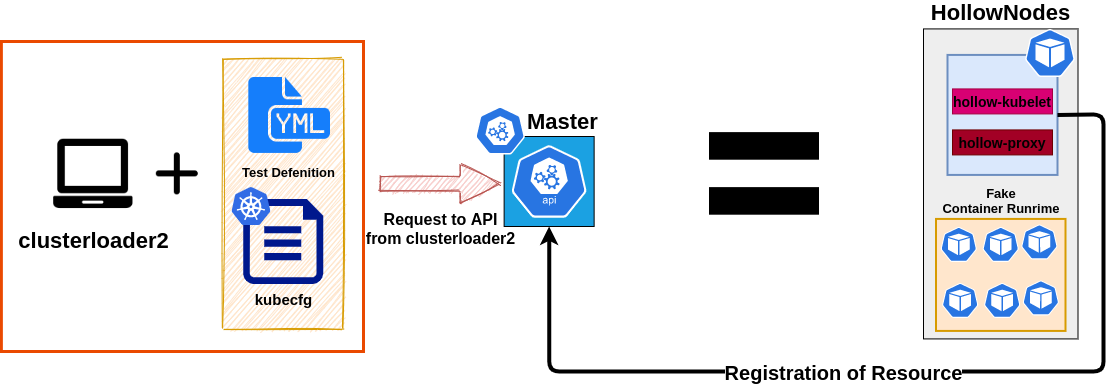

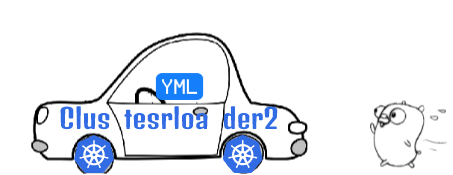

clusterloader2

Why clusterloader2?

ClusterLoader2 is Kubernetes test framework, which can deploy large numbers of various user-defined objects to a cluster

— Documentation OpenShift

Why clusterloader2?

Simple

Kubeconfig

Definition of test (YAML)

Providers (gke, kubemark, aws, local, etc)

Why clusterloader2?

User-oriented

No Golang

Easy to understand

Why clusterloader2?

Testable

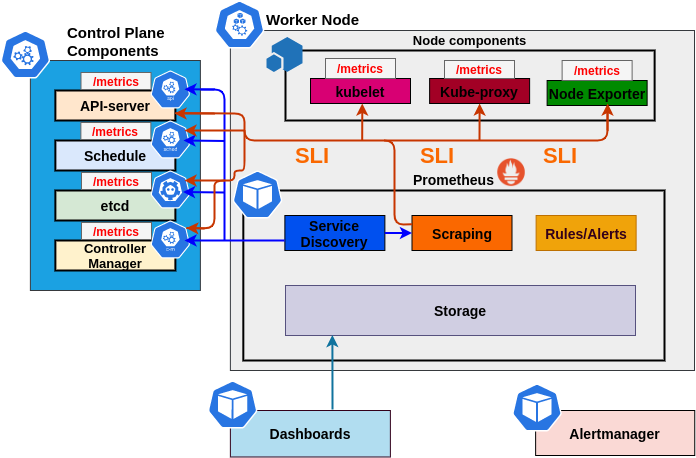

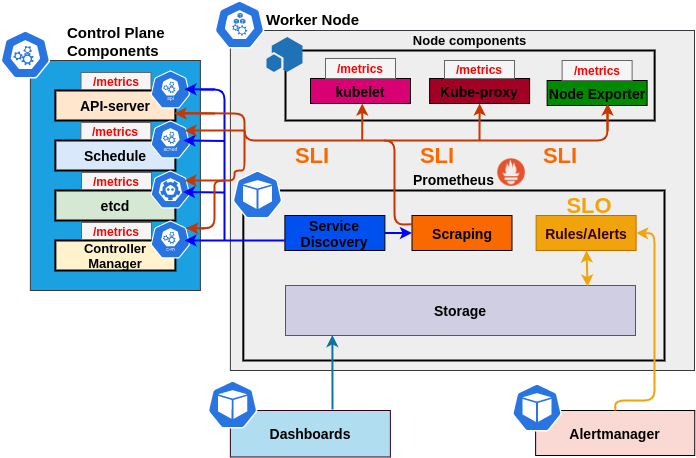

Measurable SLI/SLO

Declarative paradigm

Why clusterloader2?

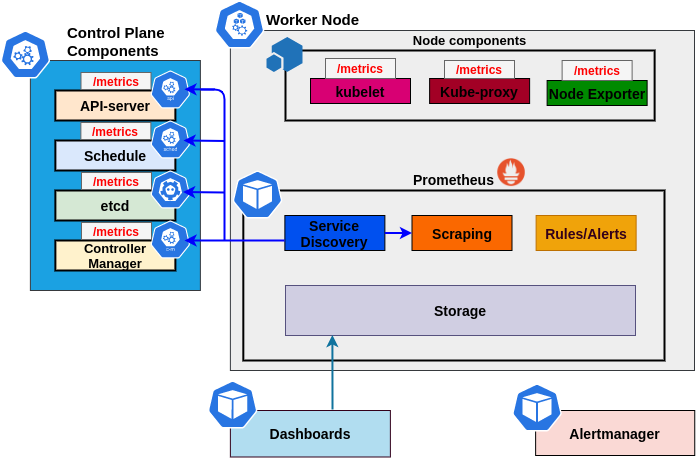

Extra metrics

PodStartupLatency

MemoryProfile

MetricsForE2E

…

Deploy of hollow cluster

Deploy of hollow cluster

| RAM: total capacity | RAM: type | CPU: model | CPU: cores/threads | CPU: arch | Network |
|---|---|---|---|---|---|
| ~392GB | DDR4 | Kunpeng 920-4826 | 48/48 | ARM64 | 100Gb/s |

| Role | CPU (real) | CPU (hollow) | RAM (real) | RAM (hollow) | Disk (real) | Disk (hollow) | Nodes (real) | Nodes (hollow) |
|---|---|---|---|---|---|---|---|---|
| Master | 12 | | 23GB | | 15GB | | 3 | |
| Monitoring | 4 | | 15GB | | 10GB | | 1 | |
| W-Node | 8 | 40m | 8GB | 20MB | 8GB | - | 50 | 1 |

Deploy of hollow cluster

    kubectl create ns kubemark

    kubectl create configmap node-configmap \
        -n kubemark --from-literal=content.type="test-cluster"

    kubectl create secret generic kubeconfig \
        --type=Opaque --namespace=kubemark \
        --from-file=kubelet.kubeconfig=${HOME}/.kube/config \
        --from-file=kubeproxy.kubeconfig=${HOME}/.kube/config

Deploy of hollow cluster

kubectl get nodes -l hollow

clusterloader2 run

    {{$POD_COUNT := DefaultParam .POD_COUNT 100}} # (1)
    {{$POD_THROUGHPUT := DefaultParam .POD_THROUGHPUT 5}}
    {{$CONTAINER_IMAGE := DefaultParam .CONTAINER_IMAGE "k8s.gcr.io/pause:3.1"}}
    {{$POD_STARTUP_LATENCY_THRESHOLD := DefaultParam .POD_STARTUP_LATENCY_THRESHOLD "5s"}}
    {{$OPERATION_TIMEOUT := DefaultParam .OPERATION_TIMEOUT "15m"}}

    name: node-throughput # (2)
    ...
    steps:
    - measurements: # (3)
      - Identifier: APIResponsivenessPrometheusSimple
        Method: APIResponsivenessPrometheus
        ...
    - phases:
      - namespaceRange:
          min: 1
          max: {{$POD_COUNT}}
        replicasPerNamespace: 1
        objectBundle:
        - basename: latency-pod-rc
          objectTemplatePath: rc.yaml # (4)
    ...

| POD COUNT | THROUGHPUT | IMAGE | LATENCY | OPS TIMEOUT |
|---|---|---|---|---|
| 1000 | 300 | pause:3.3 | 10s | 15m |
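The `DefaultParam` lines in the test definition follow a "use the override if given, otherwise the default" pattern. The sketch below reproduces that pattern with Go's standard `text/template` package. `defaultParam`, `render`, and `snippet` are illustrative names and an assumed re-implementation, not ClusterLoader2's own code.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// defaultParam returns the supplied value when present, otherwise the default.
// Illustrative re-implementation of the pattern, not ClusterLoader2's code.
func defaultParam(param, def interface{}) interface{} {
	if param == nil {
		return def
	}
	return param
}

// render executes a template snippet against a map of overrides.
func render(text string, overrides map[string]interface{}) (string, error) {
	tmpl, err := template.New("config").
		Funcs(template.FuncMap{"DefaultParam": defaultParam}).
		Parse(text)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := tmpl.Execute(&buf, overrides); err != nil {
		return "", err
	}
	return buf.String(), nil
}

// snippet declares two parameters the same way the test definition does.
const snippet = `{{$POD_COUNT := DefaultParam .POD_COUNT 100}}` +
	`{{$POD_THROUGHPUT := DefaultParam .POD_THROUGHPUT 5}}` +
	`pods={{$POD_COUNT}} throughput={{$POD_THROUGHPUT}}`

func main() {
	// No overrides: every parameter falls back to its default.
	defaults, err := render(snippet, map[string]interface{}{})
	if err != nil {
		panic(err)
	}
	fmt.Println(defaults)

	// Overrides taken from the parameter table above.
	tuned, err := render(snippet, map[string]interface{}{
		"POD_COUNT":      1000,
		"POD_THROUGHPUT": 300,
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(tuned)
}
```

A key missing from the overrides map reaches the function as `nil`, which is why the nil check is enough to select the default.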

clusterloader2 run

    apiVersion: v1
    kind: ReplicationController
    spec:
      replicas: {{.Replicas}}
      selector:
        name: {{.Name}}
      template:
        metadata:
          labels:
            name: {{.Name}}
            group: {{.Group}}
        spec:
          automountServiceAccountToken: false
          containers:
          - image: {{.Image}}
            imagePullPolicy: IfNotPresent
            name: {{.Name}}
    ...
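To see what the object template looks like once its placeholders are filled in, it can be rendered locally with Go's `text/template`. This is a sketch only: the `rcParams` type, the `renderRC` helper, and the values in `main` (object name, group) are hypothetical, and the way ClusterLoader2 itself supplies these fields is not shown here.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// rcParams holds the placeholders used by the template.
type rcParams struct {
	Name     string
	Group    string
	Image    string
	Replicas int
}

// rcTemplate mirrors the visible part of rc.yaml from the slide.
const rcTemplate = `apiVersion: v1
kind: ReplicationController
spec:
  replicas: {{.Replicas}}
  selector:
    name: {{.Name}}
  template:
    metadata:
      labels:
        name: {{.Name}}
        group: {{.Group}}
    spec:
      automountServiceAccountToken: false
      containers:
      - image: {{.Image}}
        imagePullPolicy: IfNotPresent
        name: {{.Name}}
`

// renderRC fills the template with the given parameters.
func renderRC(p rcParams) (string, error) {
	tmpl, err := template.New("rc").Parse(rcTemplate)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := tmpl.Execute(&sb, p); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	// Hypothetical values; only the image matches the default in the test definition.
	out, err := renderRC(rcParams{
		Name:     "latency-pod-rc-0",
		Group:    "latency",
		Image:    "k8s.gcr.io/pause:3.1",
		Replicas: 1,
	})
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```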

Results of clusterloader2 work

Results of measurements for etcd

Results of measurements for API-server

Deploy of large cluster

Deploy of large cluster

| Role | CPU | RAM | Disk | Number of nodes |
|---|---|---|---|---|
| Master | 36 | 96GB | 30GB | 3 |
| Monitoring | 12 | 80GB | 40GB | 1 |
| W-Nodes | 8 | 4GB | 15GB | 200 |
| H-Nodes | 40m | 20MB | - | 5000 |
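The table allows a quick check of how much of the real worker capacity the hollow nodes request. The sketch below assumes the H-Nodes run as pods on the W-Nodes and that 1GB equals 1024MB; the `pool` type and `share` helper are illustrative names.

```go
package main

import "fmt"

// pool describes a group of identical nodes from the table.
type pool struct {
	name     string
	count    int
	milliCPU int // CPU per node, in millicores
	memMB    int // RAM per node, in MB
}

// totalMilliCPU returns the CPU requested by the whole pool, in millicores.
func (p pool) totalMilliCPU() int { return p.count * p.milliCPU }

// totalMemMB returns the RAM requested by the whole pool, in MB.
func (p pool) totalMemMB() int { return p.count * p.memMB }

// share returns part as a percentage of whole.
func share(part, whole int) float64 {
	return 100 * float64(part) / float64(whole)
}

func main() {
	// Values from the table; 1GB is taken as 1024MB (an assumption).
	workers := pool{name: "W-Nodes", count: 200, milliCPU: 8000, memMB: 4 * 1024}
	hollow := pool{name: "H-Nodes", count: 5000, milliCPU: 40, memMB: 20}

	fmt.Printf("%s request %d millicores, %.1f%% of %s CPU\n",
		hollow.name, hollow.totalMilliCPU(),
		share(hollow.totalMilliCPU(), workers.totalMilliCPU()), workers.name)
	fmt.Printf("%s request %d MB, %.1f%% of %s RAM\n",
		hollow.name, hollow.totalMemMB(),
		share(hollow.totalMemMB(), workers.totalMemMB()), workers.name)
}
```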

Results of clusterloader2 work

Results of measurements for etcd

| Stage | Nodes | RPC rate (req/s) | Memory (MB) | Client traffic in (kB/s) | Client traffic out (kB/s) | DB fsync duration (ms) | WAL fsync duration (ms) | Number of resources |
|---|---|---|---|---|---|---|---|---|
| Idle | 204 | 60 | 80 | 40 | 300 | 60 | 30 | - |
| Idle hollow | 5204 | 600 | 9000 | 185 | 2500 | 63 | 30 | +5000 |
| Test | 5000 | 3800 | 9000 | 240 | 4000 | 100 | 40 | +30000 |

Results of measurements for API server

| Stage | Nodes | CPU | Memory (MB) | SLI read (req/s) | SLI write (req/s) | Number of resources |
|---|---|---|---|---|---|---|
| Idle | 204 | 1.2 | 1500 | 30 | 43 | - |
| Idle hollow | 5204 | 3 | 40000 | 520 | 560 | +5000 |
| Test | 5000 | 8 | 40000 | 3500 | 590 | +30000 |
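One way to read the etcd and API server measurements is as average memory growth per added hollow node between the "Idle" and "Idle hollow" stages. The sketch below does that arithmetic with the reported values; the `stage` type and function name are illustrative, and the result is a rough average, not a model of how memory scales.

```go
package main

import "fmt"

// stage holds the node count and memory reading for one measurement row.
type stage struct {
	nodes int
	memMB int
}

// memPerAddedNodeMB returns the average memory growth in MB per node added
// between two stages.
func memPerAddedNodeMB(before, after stage) float64 {
	return float64(after.memMB-before.memMB) / float64(after.nodes-before.nodes)
}

func main() {
	// "Idle" and "Idle hollow" rows from the measurement tables.
	etcdIdle, etcdHollow := stage{nodes: 204, memMB: 80}, stage{nodes: 5204, memMB: 9000}
	apiIdle, apiHollow := stage{nodes: 204, memMB: 1500}, stage{nodes: 5204, memMB: 40000}

	fmt.Printf("etcd: ~%.2f MB per added node\n", memPerAddedNodeMB(etcdIdle, etcdHollow))
	fmt.Printf("API server: ~%.2f MB per added node\n", memPerAddedNodeMB(apiIdle, apiHollow))
}
```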

Our experience

Our experience

~200 real worker nodes and 1 master

70k out of the box

130k after tuning etcd

Our experience

~200 real worker nodes and 3 masters

80k out of the box

142k after tuning API-server

Our experience

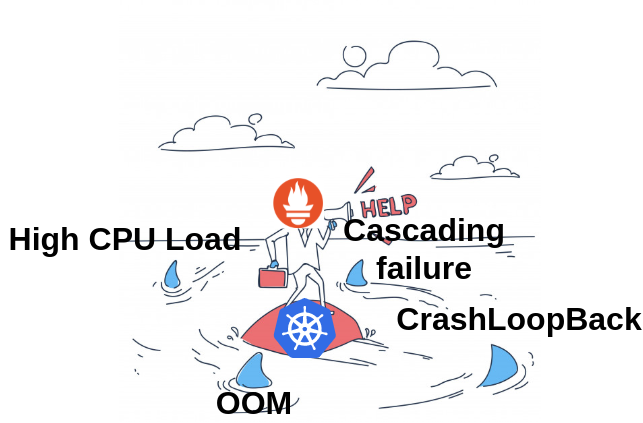

Default configuration is not suitable for large-scale clusters

etcd

CNI

API-server

Memory leaks caused by a large number of resources

etcd

API-server

Our experience

Other

ANY QUESTIONS?

FEEL FREE TO ASK ME

efrikin.github.io/devopsconf2021